Terraform resource move

Recently I was working on a Terraform project, creating infrastructure and handling deployment tasks. At the same time I was writing modules and tailoring existing ones for our use. Our customer needed Azure SQL Databases, so I edited a module I found to suit the needs at the time.

Databases created, module used, everyone happy for now.

Later I realized that my server/database module did not ensure a stable and predictable infrastructure state!

In this post I will try to account for the steps I had to take to reach my preferred end state: a more stable solution.

Preface

When creating resources in Terraform, you can use a resource block directly, or you can use a module block. My limited experience with both has taught me that the choice matters for future infrastructure changes. More often than not I was using resource blocks, and I was mostly satisfied with the outcome. The problem starts when I see potential for improvement and want to make it modular and repeatable. If Terraform has already created the resource from a resource block, you can't just snap your fingers and change it to a module block. Likewise, if you created a resource through a module and that module needs to be split into several modules, it will require some work. This was my challenge: the databases and the server were created by a single module, but I wanted to split them into two modules for reusability and a predictable infrastructure state.

My challenge

I was using a single module that created an Azure SQL Server plus the databases supplied in a list. This causes several problems, but my main concern was the predictability of database creation. The database settings were supplied as a list of objects like this:

    variable "databases" {
      description = "List of databases to deploy."

      # Every attribute listed here is required on every object in the list.
      type = list(object({
        name             = string
        sku_name         = string
        license_type     = string
        min_capacity     = number
        auto_pause_delay = number
        collation        = string
        max_size_gb      = number
        tags             = map(string)
      }))
    }

The first issue with this method of creating databases is default values for object attributes. Default values for object attributes became an experimental feature in Terraform 0.15, but we are currently on the latest 0.14 and do not want to rely on experimental features at the moment.

The result of this issue is that every setting on objects in the supplied database list must have a value. If not, the Terraform validation will fail.
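To make this concrete, here is a rough sketch of what a single entry in the list has to look like. The names and values are placeholders I made up for illustration, not the customer's actual settings.

```hcl
# Hypothetical terraform.tfvars entry; all names and values are placeholders.
databases = [
  {
    name             = "app1-db"
    sku_name         = "GP_S_Gen5_1"
    license_type     = null # not relevant here, but the attribute must still be written out
    min_capacity     = 0.5
    auto_pause_delay = 60
    collation        = "SQL_Latin1_General_CP1_CI_AS"
    max_size_gb      = 32
    tags             = {}
  },
]
```

Dropping any one of these lines, for example tags, makes the value fail the type constraint of the variable.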

The second issue with this method is the order of databases in the list. If I need to remove a database, add a database, or otherwise change the order of the list, some unwanted changes will inevitably follow. I tried removing the first database in the list, and every other database had to be replaced with the next one. The list-order problem can be fixed, I am sure, but that was not the quick fix I was looking for. Something else had to be done.

The result of this issue is that every change in the list order causes otherwise unchanged database resources to be changed.
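To illustrate why the order matters, here is a minimal sketch of how a module might create databases from a list with count. This is my assumption about the general pattern, not the actual module code. The resource label db and the variable server_id are hypothetical, and the object type is trimmed to two attributes.

```hcl
# Hypothetical sketch: one database per list element, addressed by position.
variable "server_id" {
  type = string
}

variable "databases" {
  type = list(object({
    name     = string
    sku_name = string
  }))
}

resource "azurerm_mssql_database" "db" {
  count = length(var.databases)

  # Instance [0] is simply whatever is first in the list right now.
  name      = var.databases[count.index].name
  server_id = var.server_id
  sku_name  = var.databases[count.index].sku_name
}
```

With this pattern, removing the first element shifts every remaining database down one index. Terraform then sees instance [0] with a different name and plans a replacement, and so on down the list.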

Another challenge with this module is that all future changes to database configuration are unnecessarily complex. If I wanted to add an optional setting, for example, I would have to add it to the object type. Then I would have to set it explicitly for each database, even where the default applies. This is far from ideal, and not how modules are supposed to work.

Preparations for the fix

I had started to form a plan for fixing this, and it included creating a separate module for databases. That is the end state, however, and before getting there some advanced terraforming had to be done!

My experience with Terraform so far has been with what I assume are the most commonly used, standard commands:

- terraform validate

- terraform plan

- terraform apply

- terraform destroy

To perform this module split without destroying and re-creating the databases in question, I would have to try a new kind of command: terraform state mv.

Another aspect to be aware of is running in a DevOps pipeline versus running locally. This customer has an Azure DevOps multi-stage YAML pipeline that validates, plans, and applies the Terraform config. For this fix I had to run Terraform locally for a while, in my WSL2 Ubuntu 20.04, where I had already installed the correct version of Terraform. When running locally, be sure to check the Terraform version: updating remote state written by Terraform 0.14.0 with a Terraform 0.14.5 binary can have unwanted consequences.

I also had to set the necessary environment variables, because Terraform does not support authenticating through the Azure CLI when az login was done with a service principal. Only regular user accounts logged in with az login can be used by Terraform for authentication.
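As a sketch of how both points can show up in the configuration, the block below pins the Terraform version and leaves credentials out of the provider block. The version number is a placeholder, and I am assuming a service principal with a client secret, in which case the azurerm provider reads the ARM_* environment variables named in the comment.

```hcl
terraform {
  # Placeholder pin: use the version that last wrote the remote state.
  required_version = "= 0.14.5"

  required_providers {
    azurerm = {
      source = "hashicorp/azurerm"
    }
  }
}

provider "azurerm" {
  features {}

  # No credentials in code. For a service principal with a client secret,
  # the provider picks these up from the environment:
  #   ARM_CLIENT_ID, ARM_CLIENT_SECRET, ARM_SUBSCRIPTION_ID, ARM_TENANT_ID
}
```

With an exact version constraint in place, a local binary of a different version should refuse to run instead of quietly touching the state.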

Module creation

I began the fix by creating a new module for SQL databases. It is based on existing modules and is not complex: some conditional expressions for serverless versus provisioned, some logic for DTU versus vCore, and some logic for Hybrid Benefit on provisioned Azure SQL. All of this is based on the azurerm_mssql_database resource and not azurerm_sql_database (which I guess will be deprecated eventually).

The two resource types are different in that the mssql one supports serverless database types. I just recently started using the mssql resource, but so far it seems more suitable for new database deployments.
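To give an idea of the kind of logic involved, here is a minimal sketch of such a database module. It is not the module I ended up with. The variable names, the defaults, and the way serverless SKUs are detected (by the GP_S_ prefix) are assumptions for illustration, and the DTU versus vCore logic is left out.

```hcl
# Hypothetical database module; variable names and defaults are illustrative.
variable "name" {
  type = string
}

variable "server_id" {
  type = string
}

variable "sku_name" {
  type    = string
  default = "GP_S_Gen5_1"
}

variable "min_capacity" {
  type    = number
  default = 0.5
}

variable "auto_pause_delay" {
  type    = number
  default = 60
}

variable "license_type" {
  type    = string
  default = "LicenseIncluded"
}

locals {
  # Assumption: serverless SKUs are recognized by the "GP_S_" prefix.
  is_serverless = length(regexall("^GP_S_", var.sku_name)) > 0
}

resource "azurerm_mssql_database" "db" {
  name      = var.name
  server_id = var.server_id
  sku_name  = var.sku_name

  # Serverless-only settings; null leaves them unset for provisioned SKUs.
  min_capacity                = local.is_serverless ? var.min_capacity : null
  auto_pause_delay_in_minutes = local.is_serverless ? var.auto_pause_delay : null

  # License type (Hybrid Benefit) is only passed for provisioned databases.
  license_type = local.is_serverless ? null : var.license_type
}
```

Because every optional setting is an ordinary variable with a default, a new setting can be added later without touching the existing module calls.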

After the finished module was staged, committed, and pushed, I needed to tackle the actual resource move.

Moving the resources

To get a better understanding of how the remote state was built, and to have a backup for when I inevitably screwed up, I downloaded the remote state JSON. This lists all necessary information for your infrastructure in one comprehensive JSON file. You should never edit this manually, unless you want to end up with a broken Terraform state! The Terraform state file also contains any key vault secrets you have exposed as data sources, so treat it as a sensitive file!

After I had gathered some relevant information from the state file, I wanted to do a dry run. I removed the first item from the database list and added it as an instance of my new module, with the same settings as before. Then I saved the tf-file and ran terraform plan.
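The change in the tf-file could look roughly like this. The module name, the source path, the input names, and the server_id output on the server module are all hypothetical; the point is only that the database leaves the list and gets its own module block.

```hcl
# Hypothetical call of the new database module for the first database.
# Module path, names, and values are placeholders.
module "sqldb_app1" {
  source = "./modules/sql-database"

  name     = "app1-db"
  sku_name = "GP_S_Gen5_1"

  # Assumes the server module exposes the server ID as an output.
  server_id = module.sqlserver.server_id
}
```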

As expected, the plan called for major changes. Several databases would be replaced, some destroyed, and all the database diagnostic settings either replaced or destroyed. These were only planned changes, of course; nothing was applied. The remote state is refreshed when plan is run, but I think this is just fetching info from your actual infrastructure.

The databases in question were created with the Terraform count argument. This means, among other things, that the resources are grouped together in a collection. When referencing a single resource in the collection, we need to use an index number. These index numbers can be found in the Terraform state file, or by using terraform plan. This works differently if the resources were created with the for_each argument, where instances are referenced by key instead of by index number.

From the plan output, I could see the actual resource IDs and index numbers of the databases. They are not dynamic: if the database index number is [1], it will not change when you remove the [0] database from the state. At least this did not happen in my situation. When I had the necessary information, and had done a few double takes to check that it was correct, I was ready to do the state move.

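The state move is actually a pretty simple action. Do a terraform init, then a terraform plan to see what needs to change, then do terraform state mv 'source' 'destination'. For a resource created with count inside a module, the source address has the form module.<module name>.<resource type>.<resource name>[<index>], and the single quotes keep the shell from interpreting the brackets. You can see some examples from Terraform here.

If the move succeeded, it will output "Successfully moved 1 object(s)." or similar.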

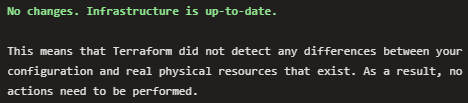

After this was done for all the databases and diagnostic settings, I could run terraform plan and get the result I wanted: no databases or diagnostic settings planned for destruction or replacement.

In conclusion

We can’t always look into the future and see what we will need at a certain point, and it is good that we have the option to modify our configurations. It is possible to move resources in Terraform state, and it is not very complex. As long as you plan the actions, and try to predict the outcomes, it is just a standard maintenance kind of thing. terraform plan will always notify you if changes are necessary, and you choose to apply the config only when you agree with the plan.

I have not provided the modules here, but the SQL module is a modification of this public module maintained by Kumaraswamy Vithanala. I ended up with two modules for this, one for SQL Server and one for SQL Database. Already I can see some further tweaks to the module that will possibly require a new move.