Terraform vs ARM Template deployment

This will be a two-part post, where the first part sets the stage for the second. Together, the two posts will give some insight into how a Terraform deployment differs from an ARM Template deployment, and how you can deploy a self-hosted GitHub runner in Azure.

I will not do a broad comparison of ARM vs Terraform deployments. There are benefits and drawbacks to both, and in a future post I might do some basic comparisons based on my experience.

ARM Templates vs Terraform

Before I dig into the how, I want to explain the why. ARM Templates and Terraform have somewhat different methods of deploying your resources.

ARM Templates

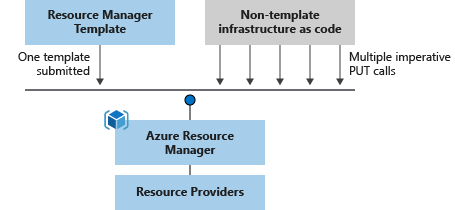

When an ARM Template is sent to the ARM API, every subsequent action is - somewhat simplified - performed from the inside. This means that the ARM Template is sent to Azure, and handled in the backend. No resource management commands are actually sent after the deployment has been started.

The process is visualized in this image from MS Docs:

Terraform

Terraform is written in the Go programming language, and works differently from ARM Templates when managing resources in Azure. Terraform will - also somewhat simplified - interpret your .tf files and perform a series of small PUT requests to the ARM REST API (in parallel where possible).

This is also the case for other third-party tools like Pulumi, Ansible, and others.

The challenge

When creating resources in Azure, you will most likely want to follow best practices. Azure network security best practices recommend enabling the firewall on services where this is possible. This is not always necessary, but it is a good practice that reduces the chance of unintentional data leakage.

If you deploy a firewalled resource with an ARM Template, everything works as expected. All is well. You can deploy this via template several times, and the end result will be the same.

If you try to deploy the same firewalled resource with Terraform, it will only halfway succeed. With a storage account as the example, the creation of the account itself will succeed, but any operation that creates a container or file share in it will fail. The same goes for reading secret content from a firewalled key vault.

This is because Terraform creates the actual resource in one of the early requests, then enables the firewall in a separate request. Later it tries to create the container or file share, or to read the relevant secrets from a key vault. Those requests go to the data plane of the resource, which the firewall now blocks, and this probably leads to a “403 not authorized” error. Terraform is cutting off the proverbial branch it is sitting on, effectively blocking itself from managing the data plane.
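To make the failure concrete, here is a minimal sketch of a configuration that would typically run into it. All resource names are hypothetical, and it assumes that Terraform runs from a machine with no firewall exception and that the provider creates the container through the data plane.

```hcl
resource "azurerm_resource_group" "blocked" {
  name     = "blocked-example-resources"
  location = "norwayeast"
}

# Control plane: created through the ARM REST API, so this succeeds.
resource "azurerm_storage_account" "blocked" {
  name                     = "blockedexamplestorage"
  resource_group_name      = azurerm_resource_group.blocked.name
  location                 = azurerm_resource_group.blocked.location
  account_tier             = "Standard"
  account_replication_type = "LRS"

  # The firewall denies everything, including the machine running Terraform.
  network_rules {
    default_action = "Deny"
  }
}

# Data plane (assumption, see above): the container is created through the
# storage account's own endpoint, which the firewall now blocks, so this
# step is expected to fail with a 403.
resource "azurerm_storage_container" "blocked" {
  name                  = "examplecontainer"
  storage_account_name  = azurerm_storage_account.blocked.name
  container_access_type = "private"
}
```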

Possible solutions

It is possible to work around this problem. The easiest and most reckless workaround is simply not enabling the firewall at all. This removes the problem, but you will have to add exceptions to Azure Security Center to stay compliant. Not a recommended approach.

Instead I have outlined some more secure solutions below, described in Terraform for simplicity. The example is creation of a storage account, but the principles will also work with key vaults, or other control plane/data plane resource combinations.

Public IP Exception

Run Terraform from a known location and add your public IP address to the storage account IP rules.

    resource "azurerm_resource_group" "example" {
      name     = "example-resources"
      location = "norwayeast"
    }

    resource "azurerm_storage_account" "example" {
      name                     = "storageaccountname"
      resource_group_name      = azurerm_resource_group.example.name
      location                 = azurerm_resource_group.example.location
      account_tier             = "Standard"
      account_replication_type = "LRS"

      network_rules {
        default_action             = "Deny"
        ip_rules                   = ["12.13.14.15"]
        virtual_network_subnet_ids = []
      }
    }

If you are running the Terraform executable on your own client, just check your public IP with minip.no, whatismyip.com, or PowerShell:

    Invoke-RestMethod -Uri https://ipinfo.io/ip
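If you would rather not hardcode the address, the lookup can also be done from Terraform itself. The following is a sketch only: it assumes the `hashicorp/http` provider in version 3.0 or later (where the `response_body` attribute is available), and the names `my_public_ip` are hypothetical.

```hcl
terraform {
  required_providers {
    http = {
      source  = "hashicorp/http"
      version = ">= 3.0"
    }
  }
}

# Ask an external service for the public IP of the machine running Terraform.
data "http" "my_public_ip" {
  url = "https://ipinfo.io/ip"
}

locals {
  # chomp() strips a trailing newline, if the service returns one.
  my_public_ip = chomp(data.http.my_public_ip.response_body)
}
```

The storage account can then use `ip_rules = [local.my_public_ip]` in its `network_rules` block instead of a fixed address. The trade-off is that the rule changes whenever Terraform is run from a different location.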

Run from Azure

Run Terraform from a VM in Azure. Enable the service endpoint for storage services in the VM subnet, and add the subnet to virtual network rules on the storage account. This will enable connectivity from VM to storage account, even if the default network access rule is set to deny.

    resource "azurerm_resource_group" "example" {
      name     = "example-resources"
      location = "norwayeast"
    }

    resource "azurerm_virtual_network" "example" {
      name                = "virtnetname"
      address_space       = ["10.0.0.0/16"]
      location            = azurerm_resource_group.example.location
      resource_group_name = azurerm_resource_group.example.name
    }

    # The VM executing terraform must be connected to this subnet for connection to work
    resource "azurerm_subnet" "example" {
      name                 = "vmsubnet"
      resource_group_name  = azurerm_resource_group.example.name
      virtual_network_name = azurerm_virtual_network.example.name
      address_prefixes     = ["10.0.2.0/24"]
      service_endpoints    = ["Microsoft.Sql", "Microsoft.Storage", "Microsoft.KeyVault"]
    }

    resource "azurerm_storage_account" "example" {
      name                     = "storageaccountname"
      resource_group_name      = azurerm_resource_group.example.name
      location                 = azurerm_resource_group.example.location
      account_tier             = "Standard"
      account_replication_type = "LRS"

      network_rules {
        default_action             = "Deny"
        virtual_network_subnet_ids = [azurerm_subnet.example.id]
      }
    }
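As mentioned, the same principle should carry over to key vaults. Here is a sketch of what that could look like, reusing the resource group and subnet from the example above (the subnet already has the `Microsoft.KeyVault` service endpoint enabled). The key vault name is hypothetical, and access policies or role assignments are left out for brevity.

```hcl
# Looks up the tenant of the identity Terraform is running as.
data "azurerm_client_config" "current" {}

resource "azurerm_key_vault" "example" {
  name                = "examplekeyvaultname"
  location            = azurerm_resource_group.example.location
  resource_group_name = azurerm_resource_group.example.name
  tenant_id           = data.azurerm_client_config.current.tenant_id
  sku_name            = "standard"

  # Deny by default, but allow traffic from the subnet the Terraform VM sits in.
  network_acls {
    default_action             = "Deny"
    bypass                     = "AzureServices"
    virtual_network_subnet_ids = [azurerm_subnet.example.id]
  }
}
```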

Deploy ARM Template with Terraform

Use Terraform together with an ARM Template deployment. This sends an ARM Template to create the file share or container, so the creation is handled in the Azure backend instead of by a request from your client. A module to enable this could easily be created, and you would not need to worry about adding your public IP as an exception in the firewall.

    resource "azurerm_resource_group" "example" {
      name     = "example-resources"
      location = "norwayeast"
    }

    # Note: newer versions of the azurerm provider deprecate this resource in
    # favor of azurerm_resource_group_template_deployment.
    resource "azurerm_template_deployment" "arm-storageaccount" {
      name                = "arm-storage-account-deployment"
      resource_group_name = azurerm_resource_group.example.name

      # file() only reads local files, so download the quickstart template first:
      # https://raw.githubusercontent.com/Azure/azure-quickstart-templates/master/quickstarts/microsoft.storage/storage-file-share/azuredeploy.json
      template_body = file("${path.module}/azuredeploy.json")

      parameters = {
        "fileShareName" = "exampleshare"
      }

      deployment_mode = "Incremental"
    }
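A variation on the same idea, as a sketch: create only the container through an inline ARM Template, using the newer `azurerm_resource_group_template_deployment` resource. It assumes a storage account named `azurerm_storage_account.example` already exists in the configuration, as in the earlier examples. The deployment name, the container name, and the API version are assumptions you may need to adjust.

```hcl
resource "azurerm_resource_group_template_deployment" "container" {
  name                = "arm-storage-container-deployment"
  resource_group_name = azurerm_resource_group.example.name
  deployment_mode     = "Incremental"

  # ARM expects each parameter wrapped in an object with a "value" key.
  parameters_content = jsonencode({
    storageAccountName = { value = azurerm_storage_account.example.name }
    containerName      = { value = "examplecontainer" }
  })

  # Inline template: the container is created by ARM in the backend,
  # not by a data plane request from the machine running Terraform.
  template_content = jsonencode({
    "$schema"      = "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#"
    contentVersion = "1.0.0.0"
    parameters = {
      storageAccountName = { type = "string" }
      containerName      = { type = "string" }
    }
    resources = [
      {
        type       = "Microsoft.Storage/storageAccounts/blobServices/containers"
        apiVersion = "2021-04-01"
        name       = "[concat(parameters('storageAccountName'), '/default/', parameters('containerName'))]"
      }
    ]
  })
}
```

Wrapping this in a module with the storage account name and container name as input variables would give the reusable building block described above.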

Use Self-Hosted Agent

Both Azure DevOps Pipelines and GitHub Workflows allow the use of self-hosted agents (GitHub calls them self-hosted runners), and both are covered in the official documentation for each product. You can then host this agent wherever you like, and add an IP rule for the agent's public IP to the storage account.
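One way to keep the agent's address out of the storage account definition is to pass it in as a variable and manage the firewall rules as a separate resource. This is a sketch under assumptions: the variable `agent_public_ip` is hypothetical, and the storage account `azurerm_storage_account.example` is assumed to be defined without an inline `network_rules` block, since the two ways of defining rules should not be combined on the same account.

```hcl
variable "agent_public_ip" {
  description = "Public IP address of the self-hosted agent (hypothetical variable)."
  type        = string
}

# Firewall rules managed separately from the storage account itself.
resource "azurerm_storage_account_network_rules" "agent" {
  storage_account_id = azurerm_storage_account.example.id
  default_action     = "Deny"
  ip_rules           = [var.agent_public_ip]
}
```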

Summary

This might not be a major issue, but it is a minor inconvenience. In my opinion, this is an example of yak shaving:

I’ll get to the storage account creation, once I have created this module, but before that I need to create a self-hosted agent, and before that I need to create a custom terraform docker image…

Everything written about ARM Templates in this post also applies to Bicep.

In an upcoming post I will show you how to use a self-hosted GitHub runner in Azure with vnet integration. This is surprisingly easy, but hopefully you’ll stay tuned for my next post explaining it anyway! :-)