AKS GitHub-Runners

Last time, I wrote about deploying a self-hosted GitHub runner locally on your own machine. That is obviously not a production-grade setup; it was mostly for fun.

This post gives an example of how it can be run in Azure Kubernetes Service (AKS). The AKS deployment is nothing special, but it also includes an Azure Container Registry (ACR) and a Key Vault for any other needs. AAD Pod Identity is great for accessing Key Vault from AKS, and will be added to the Terraform code when it is out of preview. I was planning on storing the Personal Access Token (PAT) in Key Vault, but decided not to, to keep things somewhat simple. The Key Vault stays anyway.

Fair warning: this infrastructure will cost a few thousand NOK each month. Remember to clean up when you are finished testing/labbing! Most of the cost comes from AKS, so you can delete just the AKS cluster if you want to keep the rest.
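A minimal cleanup sketch, assuming you run it from the repository's terraform folder with the same variables you used for planning. The function names are hypothetical, and the resource group and cluster names are placeholders for your own. Deleting only the cluster with az aks delete leaves it in the Terraform state, so a later terraform plan will propose recreating it.

```shell
#!/usr/bin/env bash
# Minimal cleanup sketch; run it from the repository's terraform folder.

# Remove everything the Terraform configuration created.
# Any extra arguments (for example -var-file=...) are passed on to terraform destroy,
# which asks for confirmation before deleting anything.
destroy_all() {
  terraform destroy "$@"
}

# Remove only the AKS cluster, where most of the cost comes from.
# Terraform state still lists the cluster afterwards, so the next
# terraform plan will propose to recreate it.
delete_aks_only() {
  local resource_group="$1" cluster_name="$2"
  az aks delete --resource-group "$resource_group" --name "$cluster_name" --yes --no-wait
}
```

For example, destroy_all -var-file=variables.tfvars removes everything, while delete_aks_only YourResourceGroupName YourAksClusterName removes just the cluster.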

Prerequisites

- An Azure account with a subscription where you can create resources

- Latest Azure CLI (az) installed on your client

- Latest Terraform installed on your client

- kubectl installed on your client (it can also be installed with the Azure CLI: az aks install-cli)
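A quick way to check the prerequisites before you start. This is a minimal sketch with a hypothetical function name; it only tests whether each tool is on your PATH and does not check versions.

```shell
#!/usr/bin/env bash
# Minimal sketch: report which required command-line tools are on the PATH.
# With no arguments it checks the tools from the prerequisites list.
check_prereqs() {
  local tools=("$@")
  local tool
  local missing=0
  if [ "${#tools[@]}" -eq 0 ]; then
    tools=(az terraform kubectl)
  fi
  for tool in "${tools[@]}"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=$((missing + 1))
    fi
  done
  # Exit status is non-zero when at least one tool is missing.
  [ "$missing" -eq 0 ]
}
```

Run check_prereqs with no arguments to check az, terraform and kubectl. To see which versions you have, az version, terraform version and kubectl version --client print them.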

Deploy AKS++

You can skip this step if you already have the infrastructure set up and just want to see how the runner itself is deployed. This step uses Terraform and will take some time to run.

- Clone the repository (https://github.com/torivara/tf-aks-github-runners) to download the files needed.

- Look over the .tf files and make any necessary changes. I recommend creating a .tfvars file so you can provide variable values more easily.

- Navigate to the terraform folder.

- Log in with an Azure account that has access to a subscription where you can deploy some temporary resources.

- Make sure you have selected the correct subscription.

- Initialize Terraform.

- Run a plan, providing variables through a variable file or as parameters.

- Check that the plan succeeds and creates the resources you want. Confirm that no resources are deleted.

- Run terraform apply with the plan file: terraform apply plan.tfplan

Code:

```shell
git clone https://github.com/torivara/tf-aks-github-runners.git
cd tf-aks-github-runners
cd manifests
# Edit variables to your needs
cd ..
cd terraform

# Log in to Azure with an account that has an active subscription
az login
az account show
# The subscription can be given as an ID or a name
az account set -s "<subscription>"

# Initialize the terraform folder
terraform init

# Plan the deployment with a variable file
terraform plan -var-file=yourvariablefile.tfvars -out=plan.tfplan

# Or plan the deployment with explicit parameters
terraform plan -var="tenant_id=12345-678910-111213" -var="kubernetes_version=1.21.4" -out=plan.tfplan

# Apply the configuration if you are satisfied with the plan
terraform apply "plan.tfplan"
```
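The plan commands above take either a variable file or explicit parameters. Here is a minimal sketch (hypothetical function name) that creates the variable file with the two variables from the parameter example; the repository's variables.tf may define or require more, so compare against it. Kubernetes 1.21.4 is no longer offered by AKS, so pick a version that is currently supported in your region (az aks get-versions --location <region> --output table lists them).

```shell
#!/usr/bin/env bash
# Minimal sketch: write a tfvars file containing the two variables used in the
# parameter example. Compare with variables.tf and add any others it needs.
write_tfvars() {
  local file="$1" tenant_id="$2" kubernetes_version="$3"
  cat > "$file" <<EOF
tenant_id          = "${tenant_id}"
kubernetes_version = "${kubernetes_version}"
EOF
}
```

For example, write_tfvars variables.tfvars "<your-tenant-id>" "<kubernetes-version>", followed by terraform plan -var-file=variables.tfvars -out=plan.tfplan.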

This will deploy:

- Azure Kubernetes Service - For your Kubernetes workloads

- Azure Key Vault - For stashing your secrets

- Azure Container Registry - For storing your container images

- Azure Virtual Network - For connecting your AKS and Key Vault

- Azure Log Analytics Workspace - For logging purposes

- Azure Resource Groups - For containing your resources

- Tags on everything with values from your variables.tfvars

Import GitHub-Runner Docker image to ACR

Microsoft recommends, as a best practice, using container images from your own container registry rather than pulling them directly from a public one. I use an Azure Container Registry here, which the supplied Terraform files deploy automatically.

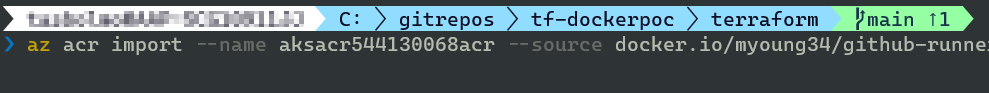

This is how you import myoung34/github-runner:latest into your container registry:

```shell
export REGISTRY_NAME='aksacr#########acr'
export IMAGE_REGISTRY=docker.io
export IMAGE_NAME=myoung34/github-runner
export IMAGE_TAG=latest

az acr import --name "$REGISTRY_NAME" --source "$IMAGE_REGISTRY/$IMAGE_NAME:$IMAGE_TAG" --image "$IMAGE_NAME:$IMAGE_TAG"
```

A few notes on import:

- I am using the latest tag here. For more serious deployments, pin a specific version of the runner.

- Change the registry name to match your own.

- The import must be repeated every time the remote image changes, most likely through a CI/CD pipeline.
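To follow the pinning and CI/CD notes above, the import can be wrapped in a small function that refuses the latest tag and can be re-run from a pipeline. This is a sketch: the function name is hypothetical, and the tag you pass must exist for myoung34/github-runner on Docker Hub. The --force flag overwrites the tag in ACR if it has already been imported.

```shell
#!/usr/bin/env bash
# Minimal sketch: import a pinned runner image tag into ACR.
# Intended to be called from a CI/CD job whenever a new runner version is released.
import_runner_image() {
  local registry="$1" tag="$2"
  local image="myoung34/github-runner"
  # Refuse unpinned imports so every deployment uses a known version.
  if [ -z "$tag" ] || [ "$tag" = "latest" ]; then
    echo "refusing to import an unpinned tag: '${tag}'" >&2
    return 1
  fi
  # --force overwrites the tag if it already exists in the registry.
  az acr import \
    --name "$registry" \
    --source "docker.io/${image}:${tag}" \
    --image "${image}:${tag}" \
    --force
}
```

For example: import_runner_image <your-registry-name> <pinned-tag>.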

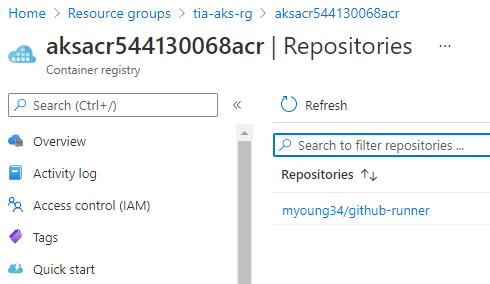

Use the script, or just copy the command. Once imported, you can see the image in your Container Registry:

Kubernetes deployment

Once AKS is running and the container image has been imported into ACR, you can connect to the cluster.

Run the following command to get credentials for the cluster. This should work as long as you have sufficient permissions on the cluster.

```shell
az aks get-credentials --resource-group YourResourceGroupName --name YourAksClusterName
```

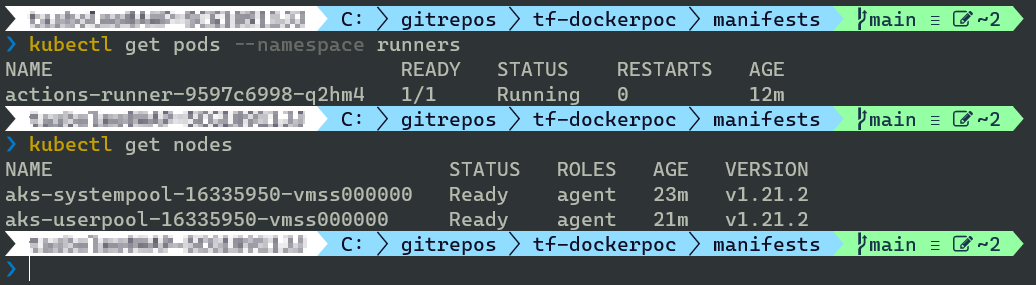

Check your access to the cluster with kubectl get nodes. You should see one node in each of the two node pools: one pool for system workloads and one for user workloads.
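Pulling the runner image from ACR only works if the cluster is allowed to pull from the registry, so it is worth verifying that the supplied Terraform sets this up. The sketch below (hypothetical function name, placeholder resource names) checks node access and then runs az aks check-acr, which validates that the cluster can reach and authenticate to the registry.

```shell
#!/usr/bin/env bash
# Minimal sketch: verify cluster access and whether AKS can pull from ACR.
# Arguments are your own resource group, cluster and registry names.
check_cluster() {
  local resource_group="$1" cluster_name="$2" registry_name="$3"
  # Expect one node per node pool (system + user).
  kubectl get nodes --output wide || return 1
  # Validates that the cluster can reach and authenticate to the registry.
  az aks check-acr --resource-group "$resource_group" --name "$cluster_name" \
    --acr "${registry_name}.azurecr.io"
}
```

If the check fails, az aks update --resource-group YourResourceGroupName --name YourAksClusterName --attach-acr <registry-name> grants the cluster pull access. If Terraform manages the role assignment, add it there instead.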

Find secret.yaml.example in the manifests folder. Put your Personal Access Token inside this template and rename the file to secret.yaml. The .gitignore file in the repository root makes sure it is not committed to GitHub.
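If you prefer not to edit the template by hand, the secret manifest can also be generated. This sketch is not the repository's template: the function name is hypothetical, it uses stringData so Kubernetes handles the base64 encoding, and it assumes the secret lives in the runners namespace and that runner.yaml reads the token from a key named ACCESS_TOKEN. Check secret.yaml.example for the key name the deployment actually expects.

```shell
#!/usr/bin/env bash
# Minimal sketch: generate secret.yaml instead of editing the template by hand.
# Assumptions (not taken from the repository):
#   - the secret lives in the "runners" namespace
#   - runner.yaml reads the token from a key named ACCESS_TOKEN
write_runner_secret() {
  local file="$1" token="$2"
  # stringData lets Kubernetes do the base64 encoding.
  cat > "$file" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: gh-runner
  namespace: runners
type: Opaque
stringData:
  ACCESS_TOKEN: "${token}"
EOF
  # The token is sensitive: keep the file readable by the current user only.
  chmod 600 "$file"
}
```

To keep the token out of your shell history, read it without echo first (read -rs GH_PAT), then run write_runner_secret secret.yaml "$GH_PAT" from the manifests folder.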

Run these commands to create the namespace, create the secret, show the secret, and create the runner deployment:

```shell
kubectl create namespace runners
kubectl apply -f secret.yaml
kubectl get secret gh-runner --namespace runners -o yaml
kubectl apply -f runner.yaml
```
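The post does not show runner.yaml itself. For orientation, here is a minimal sketch of what a Deployment for this image can look like, generated from shell so the registry and tag are filled in. It is not the repository's manifest: the function name, deployment name, labels and replica count are hypothetical, and it assumes the gh-runner secret holds the token under the key ACCESS_TOKEN and that the image reads the REPO_URL, RUNNER_SCOPE, RUNNER_NAME_PREFIX and ACCESS_TOKEN environment variables, as described in the myoung34/github-runner documentation.

```shell
#!/usr/bin/env bash
# Minimal sketch of a runner Deployment; NOT the repository's runner.yaml.
# Hypothetical: deployment name, labels and the RUNNER_NAME_PREFIX value.
# Assumed: secret gh-runner stores the PAT under the key ACCESS_TOKEN, and the
# image reads REPO_URL, RUNNER_SCOPE, RUNNER_NAME_PREFIX and ACCESS_TOKEN.
write_runner_deployment() {
  local file="$1" registry="$2" tag="$3" repo_url="$4" replicas="${5:-2}"
  cat > "$file" <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: github-runner
  namespace: runners
spec:
  replicas: ${replicas}
  selector:
    matchLabels:
      app: github-runner
  template:
    metadata:
      labels:
        app: github-runner
    spec:
      containers:
        - name: runner
          image: ${registry}.azurecr.io/myoung34/github-runner:${tag}
          env:
            - name: REPO_URL
              value: "${repo_url}"
            - name: RUNNER_SCOPE
              value: "repo"
            - name: RUNNER_NAME_PREFIX
              value: "aks"
            - name: ACCESS_TOKEN
              valueFrom:
                secretKeyRef:
                  name: gh-runner
                  key: ACCESS_TOKEN
EOF
}
```

For example: write_runner_deployment runner-sketch.yaml <registry-name> <pinned-tag> https://github.com/<owner>/<repo>. Jobs that need Docker themselves (for example container actions) require extra configuration that this sketch does not cover.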

Check pods

To see how things went and how many pods were created, use this command:

```shell
kubectl get pods --namespace runners
```

Your output should look similar to this:

Useful commands for troubleshooting:

```shell
# Get events for the runners namespace
kubectl get events --namespace runners
# List all nodes
kubectl get nodes
# List pods in the runners namespace
kubectl get pods --namespace runners
# Show logs for a pod
kubectl logs <pod-name> --namespace runners
# List all pods in all namespaces
kubectl get pods --all-namespaces
# List all secrets in all namespaces
kubectl get secrets --all-namespaces
```
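Two small helpers that build on the commands above: one waits for the rollout to finish before listing pods, the other prints recent logs from every runner pod without copying pod names by hand. A minimal sketch; the function names are hypothetical, and the deployment name you pass must match the one in runner.yaml.

```shell
#!/usr/bin/env bash
# Minimal troubleshooting helpers for the runners namespace.

# Wait until the runner Deployment has rolled out, then list its pods.
# Look up the deployment name with: kubectl get deployments --namespace runners
wait_for_runners() {
  local deployment="$1" timeout="${2:-300s}"
  kubectl rollout status "deployment/${deployment}" --namespace runners --timeout="$timeout" || return 1
  kubectl get pods --namespace runners
}

# Print the last lines of every pod's log in the runners namespace.
# --output name returns entries like pod/<name>, which kubectl logs accepts.
dump_runner_logs() {
  local tail_lines="${1:-50}" pod
  for pod in $(kubectl get pods --namespace runners --output name); do
    echo "===== ${pod} ====="
    kubectl logs "$pod" --namespace runners --tail="$tail_lines"
  done
}
```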

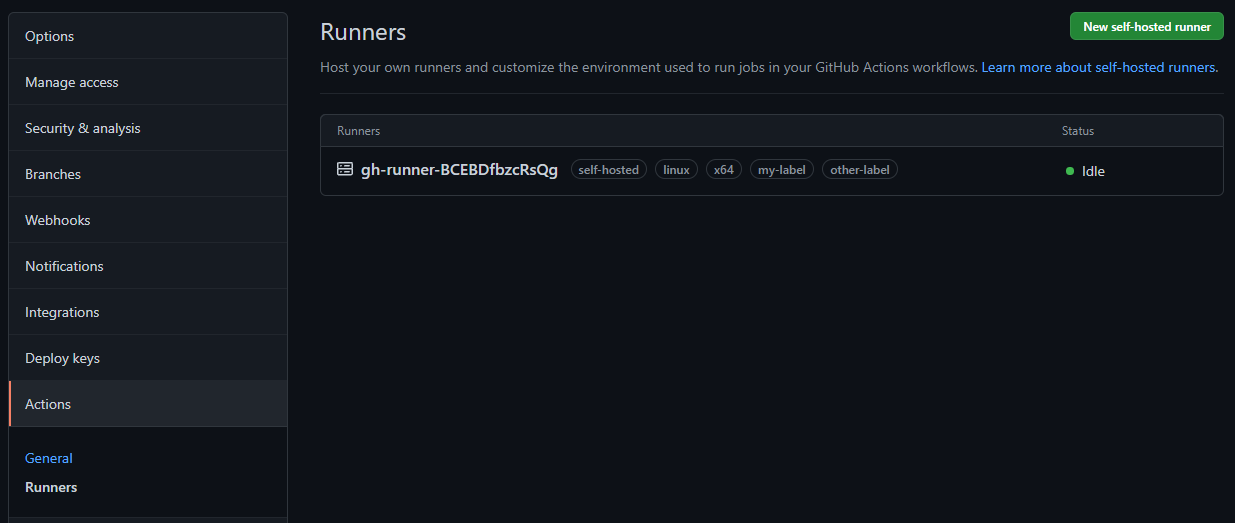

Runners should show up like this in your Repository -> Settings -> Actions -> Runners:
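You can also check the runners from the command line through the GitHub REST API endpoint for a repository's self-hosted runners. A minimal sketch, assuming curl is installed and your PAT is in an environment variable named GH_PAT (a name chosen for this example); the function name is hypothetical, and owner and repository are your own.

```shell
#!/usr/bin/env bash
# Minimal sketch: list a repository's self-hosted runners via the GitHub REST API.
# Reads the PAT from GH_PAT (a variable name chosen for this example).
list_repo_runners() {
  local owner="$1" repo="$2"
  curl --silent --show-error --fail \
    --header "Accept: application/vnd.github+json" \
    --header "Authorization: Bearer ${GH_PAT}" \
    "https://api.github.com/repos/${owner}/${repo}/actions/runners"
}
```

If jq is installed, list_repo_runners <owner> <repo> | jq '.runners[] | {name, status}' shows each runner's name and whether it is online.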

Next steps

More secure

To increase security further, you could use Enterprise Scale Landing Zones with a hub-and-spoke network. You would then create this setup in a spoke and make sure its network traffic is routed through the Azure Firewall in the hub. Because no traffic is allowed by default, you will have to explicitly allow certain FQDNs, such as docker.io and github.com.
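A minimal sketch of one such allow rule, using the classic Azure Firewall application rules from the azure-firewall CLI extension (az extension add --name azure-firewall). If your hub firewall uses a Firewall Policy, rules are managed through az network firewall policy rule-collection-group instead. The function name, resource group, firewall name, collection name, priority and subnet prefix are placeholders, and the FQDN list is illustrative, not complete: AKS itself and GitHub Actions need more endpoints than these.

```shell
#!/usr/bin/env bash
# Minimal sketch: allow outbound HTTPS from the AKS subnet to a few FQDNs with a
# classic Azure Firewall application rule (azure-firewall CLI extension).
# All names, the priority and the FQDN list are illustrative placeholders.
allow_runner_fqdns() {
  local resource_group="$1" firewall_name="$2" aks_subnet_prefix="$3"
  az network firewall application-rule create \
    --resource-group "$resource_group" \
    --firewall-name "$firewall_name" \
    --collection-name "github-runners" \
    --name "allow-github-and-docker" \
    --priority 200 \
    --action Allow \
    --protocols Https=443 \
    --source-addresses "$aks_subnet_prefix" \
    --target-fqdns github.com api.github.com docker.io registry-1.docker.io
}
```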

Scalable

To increase scalability, look into autoscaling. The cluster autoscaler can scale your node count, and a Horizontal Pod Autoscaler can scale your pod count. This can be useful if you run multiple demanding jobs and always want capacity available for more. Make sure you set a realistic maximum node count, so you don't get financially DDoS'd.
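A minimal sketch of both kinds of autoscaling with the Azure CLI and kubectl. The function names, node pool, cluster, resource group and deployment names are placeholders, and the limits are examples. If Terraform manages the node pool, make the equivalent change in the Terraform code instead so the state does not drift. The Horizontal Pod Autoscaler needs CPU requests on the runner containers, and CPU is only a rough signal of CI load.

```shell
#!/usr/bin/env bash
# Minimal sketch: enable the cluster autoscaler on the user node pool, with an
# explicit upper bound so costs stay predictable.
enable_node_autoscaling() {
  local resource_group="$1" cluster_name="$2" nodepool="$3" min="${4:-1}" max="${5:-3}"
  az aks nodepool update \
    --resource-group "$resource_group" \
    --cluster-name "$cluster_name" \
    --name "$nodepool" \
    --enable-cluster-autoscaler \
    --min-count "$min" \
    --max-count "$max"
}

# Scale the runner pods on CPU usage with a Horizontal Pod Autoscaler.
# Requires CPU requests on the containers; the deployment name is a placeholder.
enable_pod_autoscaling() {
  local deployment="$1" min="${2:-1}" max="${3:-5}"
  kubectl autoscale "deployment/${deployment}" --namespace runners \
    --min="$min" --max="$max" --cpu-percent=70
}
```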

Cost effective

If you know there will be no nightly builds or jobs, you can keep the node count at its lowest at night. You can even shut down all the nodes at night and start them again early in the morning, assuming you actually don't need these runners at night. Alternatively, run a bare minimum at night and increase the count during peak hours.
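A minimal sketch of the night-time options above, assuming you schedule it yourself, for example from cron or a scheduled pipeline. The function names are hypothetical, and the resource group, cluster and node pool names are placeholders. az aks stop stops the whole cluster, so no runners are available until az aks start has completed; scaling only the user node pool keeps the system pool and the cluster running.

```shell
#!/usr/bin/env bash
# Minimal sketch: stop the whole cluster in the evening and start it in the morning.
# A stopped cluster runs no nodes, so no runners are available while it is off.
aks_power() {
  local action="$1" resource_group="$2" cluster_name="$3"
  case "$action" in
    off) az aks stop --resource-group "$resource_group" --name "$cluster_name" ;;
    on)  az aks start --resource-group "$resource_group" --name "$cluster_name" ;;
    *)   echo "usage: aks_power on|off <resource-group> <cluster-name>" >&2; return 2 ;;
  esac
}

# Alternative: keep a bare minimum by scaling the user node pool manually.
# Only works while the cluster autoscaler is disabled on that pool.
scale_user_pool() {
  local resource_group="$1" cluster_name="$2" nodepool="$3" count="$4"
  az aks nodepool scale --resource-group "$resource_group" --cluster-name "$cluster_name" \
    --name "$nodepool" --node-count "$count"
}
```

With cron, schedules such as 0 19 * * 1-5 (weekday evenings) and 0 6 * * 1-5 (weekday mornings) could call a script that wraps these functions.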

In summary

In this post, I have shown you how to deploy GitHub runners in your own AKS cluster. I hope it gave you some inspiration and information. Both the Terraform and the Kubernetes configuration need further improvements before this is a long-term solution, but it should at least give you a start.

As always, leave a comment if you see errors or improvements!