Exploring Azure Terraform Authentication

TL;DR: Both the AzureRM provider and the remote backend (if applicable) require authentication. I recommend using environment variables with Azure AD, or OpenID Connect, where possible in pipelines. Azure CLI should be used locally. Avoid plaintext secrets, and never commit any secrets to version control!

Background

The other day I was thinking about how I actually do authentication with Terraform to Azure. I have just been doing the same thing as the blog posts and guides I have read, and never really thought much about how or why.

There have been some developments in authentication, like the OIDC support I wrote about earlier, and there are some traps to avoid. In this post I will try to explain Azure Terraform authentication, and give my two cents on how to do it.

The basics

When managing Azure infrastructure with Terraform you need to be aware of a few authentication mechanisms. The most important authentication, and the one you need for any Azure management scenario, is the provider authentication. This authentication will enable Terraform to make requests to the ARM API on behalf of a user or service principal with proper permissions.

If working in a team, and you are running Terraform in GitHub Actions, Azure DevOps Pipelines, or equivalent services, you need to handle a remotely stored state. This state will then reside in a remote backend (in my case always an Azure Storage Account), and requires some form of remote backend authentication.

It is these two authentication requirements I will outline and explain below. Terraform Cloud or Terraform Enterprise is not covered in this post, and frankly the pricing is weird for Terraform Cloud, so I haven’t done much with it outside of the free tier stuff.

Different authentications

I will assume that you have some basic knowledge about Terraform in this post, like what a Remote Backend or a provider is. If not, you can read up on most of this in Terraform Azure Getting Started.

Provider authentication

The AzureRM provider needs a way of authenticating to Azure. The Azure Provider, simply put, enables you to manage infrastructure in Microsoft Azure by leveraging API calls to the ARM REST API.

There are several ways of authenticating the provider, but the most important methods in my opinion are:

- Azure CLI

- Service Principal and Client Secret

- Service Principal and OpenID Connect (for lab and test atm)

Remote backend authentication

The AzureRM Remote Backend stores your Terraform state, which can contain sensitive values, so it is crucial to keep it away from prying eyes. Using a remote backend is important if you are working on the infrastructure as a team, or if you are deploying from CI/CD.

There are several different ways of authenticating the AzureRM remote backend, but these are my go-to methods:

- Azure CLI or Service Principal

- Azure AD

- OpenID Connect (for lab and test atm)

What does Azure AD Auth mean?

I was wondering what this setting does, as it is not properly explained in the documentation. After some testing, it looks like the difference lies in how Terraform accesses the Storage Account. If you provide resource_group_name and use_azuread_auth is set to false, Terraform will actually list the access keys and keep them in memory (not in a file). The access key is then used for authentication.

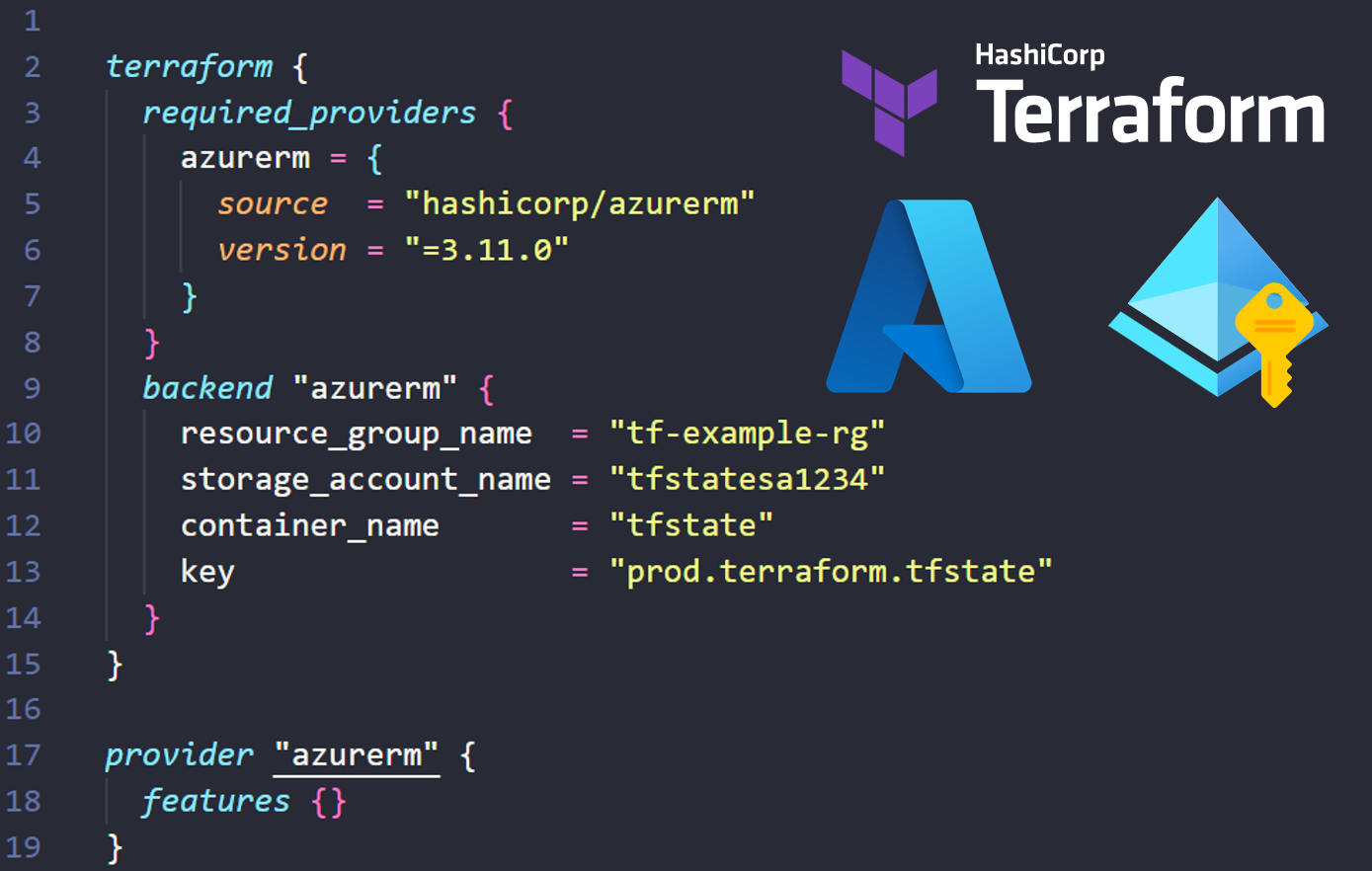

    backend "azurerm" {
      resource_group_name  = "tf-example-rg"
      storage_account_name = "tfstatesa1234"
      container_name       = "tfstate"
      key                  = "prod.terraform.tfstate"
      use_azuread_auth     = false # It is false by default
    }

If you remove the resource_group_name and use_azuread_auth is set to true, Terraform will not bother with Access Keys at all, and only use Azure AD directly. No keys are listed, downloaded, or used.

    backend "azurerm" {
      storage_account_name = "tfstatesa1234"
      container_name       = "tfstate"
      key                  = "prod.terraform.tfstate"
      use_azuread_auth     = true
    }

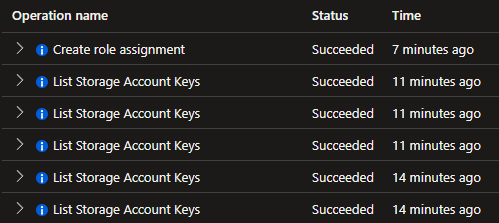

This is a snip from the activity log on my Remote Backend storage account:

The “List Storage Account Keys” entries are from before use_azuread_auth was enabled, when Terraform listed the keys each time it accessed the state file. After I created a role assignment and started using Azure AD Auth, the keys were not listed anymore.

Note that if using this access method on the Remote Backend, your user or service principal needs the Storage Blob Data Owner role on the container scope.
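The role assignment mentioned above could be sketched roughly like this. It is only an illustration under a few assumptions: the variable name is made up, the storage account and container names are reused from the backend snippets in this post, and the assignment is assumed to live in a separate bootstrap configuration run by someone who is allowed to assign roles.

```hcl
# Hypothetical input: object ID of the user or service principal that runs Terraform.
variable "terraform_principal_object_id" {
  type        = string
  description = "Object ID of the identity that needs access to the state container."
}

provider "azurerm" {
  features {}
}

# Look up the existing state storage account (names taken from the examples in this post).
data "azurerm_storage_account" "state" {
  name                = "tfstatesa1234"
  resource_group_name = "tf-example-rg"
}

# Grant Storage Blob Data Owner on the container scope only, not on the whole account.
resource "azurerm_role_assignment" "state_blob_owner" {
  scope                = "${data.azurerm_storage_account.state.id}/blobServices/default/containers/tfstate"
  role_definition_name = "Storage Blob Data Owner"
  principal_id         = var.terraform_principal_object_id
}
```

Scoping to the container rather than the storage account keeps the assignment in line with the least privilege idea discussed here.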

Using Azure AD Auth for Remote Backend seems to align better with RBAC and least privilege. I am certainly going to explore this a bit more going forward.

Running remotely (CI/CD)

When running Terraform from any kind of workflow or pipeline, you need to authenticate both the remote backend and the provider. Hosted GitHub runners will not persistently store your state file, so a remote backend is required.

- Export the credentials to environment variables (in both Windows and Linux).

  - ARM_XXX will automatically be picked up by Terraform and used for authentication.

  - Access secrets from GitHub workflow

  - Azure DevOps has a different connection to Azure, and will not require the same secrets. It supports secret variables, though, so you could do it in theory.

- Use OpenID Connect and environment variables combined

  - You store client id, tenant id, and subscription id in ARM_XXX variables.

  - GitHub Action will get a token and does not need a client secret.

  - Still not mature enough for production use IMO, but will get there eventually.
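For the environment variable approach in the list above, the Terraform side can stay almost empty. The sketch below assumes the pipeline exports ARM_CLIENT_ID, ARM_CLIENT_SECRET, ARM_TENANT_ID and ARM_SUBSCRIPTION_ID from its secret store before Terraform runs; the backend values are the example names used earlier in this post.

```hcl
# Assumed to be exported by the pipeline before terraform init/plan/apply:
#   ARM_CLIENT_ID, ARM_CLIENT_SECRET, ARM_TENANT_ID, ARM_SUBSCRIPTION_ID
# Both the backend and the provider read these, so no credentials appear in code.

terraform {
  backend "azurerm" {
    storage_account_name = "tfstatesa1234"
    container_name       = "tfstate"
    key                  = "prod.terraform.tfstate"
    use_azuread_auth     = true
  }
}

provider "azurerm" {
  # Intentionally no client_id / client_secret here.
  features {}
}
```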

Running locally

When running Terraform locally, you need to authenticate the provider, but the remote backend only if one is configured. Terraform recommends using Az CLI locally, and this will be the simplest method.

- Authenticate with Az CLI before running your Terraform commands.

  - This will enable you to authenticate with your user, but you need to choose the correct subscription.

  - You need sufficient permissions with the user authenticated in Az CLI.

  - Make sure you are authenticated to the correct tenant with ‘az account show’.

- Export ARM_XXX environment variables locally

  - You can also use environment variables locally, but this is more complex and requires you to set these each time before running Terraform.

  - This might expose your secrets in local history or console logs.

  - You could use .env file, but this is risky for several different reasons.

Take care if you have access to multiple subscriptions/tenants with your user!
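One way to reduce the risk of deploying to the wrong place is to pin the subscription and tenant in the provider block instead of relying on whatever Az CLI currently has selected. This is a small sketch, and the variable names are my own placeholders:

```hcl
# Hypothetical variables, for example supplied through a .tfvars file per environment.
variable "subscription_id" {
  type = string
}

variable "tenant_id" {
  type = string
}

provider "azurerm" {
  features {}

  # Pin the target explicitly. Authentication still comes from Az CLI,
  # but Terraform no longer depends on the CLI's currently selected subscription.
  subscription_id = var.subscription_id
  tenant_id       = var.tenant_id
}
```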

Managed Identities are not supported locally. The exception is, of course, if you run your client in Azure with a Managed Identity configured. You could also use an Arc-enabled server on-premises with managed identity. OpenID Connect does not work locally.

Personal recommendations

Some tips I have picked up during my run-ins with Terraform IaC on Azure, mostly with GitHub.

- Never store your secret credentials in plaintext, and use “partial configuration”.

- Never even use a .env file, as it is one step away from being checked in to source code.

- Even if your repository is private, you never know who will have access.

- Always try to support both remote (CI/CD) and local terraform init / terraform plan.

- Local plans simplify troubleshooting and development.

- It requires that users have more access with their accounts, and you will lose control over where your Terraform state files are downloaded to.

- Avoid using Access Keys or SAS tokens for accessing Remote Backend, as it is limiting and can be risky.

- Might make running local Terraform plans difficult, as keys would then be needed locally.

- Split service principals where possible.

- Don’t use a “master service principal” with Owner access on the root management group.

- Applying least privilege principle can further enhance environment isolation (dev/test/prod) and reduce misconfiguration blast radius.

- OpenID Connect (The end of client secrets?) removes the need to rotate secrets, and lessens the administrative burden.

- Use environment variables for sourcing ARM info.

- Use GitHub Secrets (if GitHub is your DevOps tool of choice) or equivalent for secrets storage.

- Using these tools will generally prevent secrets from getting into logs, or printed to screen by accident.

- Don’t use the “generic” AZURE_CREDENTIALS and login-action method if avoidable.

- There are better alternatives, like storing credentials in environment variables or OpenID Connect.
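As a rough illustration of the “partial configuration” tip above: the backend block in the repository is left empty, and the actual settings are supplied at init time. The file name backend.hcl is just an assumption for this sketch, and the values are the example names from earlier in the post.

```hcl
# main.tf: the backend block is committed without any environment-specific values.
terraform {
  backend "azurerm" {}
}

# backend.hcl (hypothetical file, kept out of version control if it holds anything sensitive),
# passed in with: terraform init -backend-config=backend.hcl
#
#   storage_account_name = "tfstatesa1234"
#   container_name       = "tfstate"
#   key                  = "prod.terraform.tfstate"
#   use_azuread_auth     = true
```

The same empty backend block then works both locally and in CI/CD, with each side providing its own settings.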

Some examples

The most basic auth method

- Provider authenticated with Azure CLI manually before running Terraform commands.

- Example code here.
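A minimal sketch of what this can look like, assuming you have already run az login and selected the right subscription. The version constraint and the resource group name are placeholders, not recommendations.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # assumed constraint for this sketch
    }
  }
}

# No credentials here: the provider falls back to the Azure CLI session.
provider "azurerm" {
  features {}
}

# Placeholder resource to have something to plan against.
resource "azurerm_resource_group" "example" {
  name     = "tf-cli-auth-example-rg"
  location = "westeurope"
}
```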

Azure AD Auth for remote backend (one of my preferred ways)

- Enabling Azure AD Auth will change the way Terraform accesses the Remote Backend storage account. It’s explained above, so I don’t think the details need repeating. The identity running Terraform needs Storage Blob Data Owner on the container.

- Example code here.

OpenID Connect (one of my preferred ways)

- Reduces administrative overhead of secrets management.

- Will only work from GitHub Workflow.

- Requires at least Terraform version 1.2.0, and AzureRM provider 3.7.0.

- Example code here.
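On the Terraform side, an OpenID Connect setup might look something like the sketch below. It assumes the GitHub workflow exports ARM_CLIENT_ID, ARM_TENANT_ID and ARM_SUBSCRIPTION_ID, that the workflow is allowed to request an ID token, and that a federated credential has been configured on the service principal. The backend values are the example names used earlier.

```hcl
terraform {
  required_version = ">= 1.2.0"

  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = ">= 3.7.0"
    }
  }

  backend "azurerm" {
    storage_account_name = "tfstatesa1234"
    container_name       = "tfstate"
    key                  = "prod.terraform.tfstate"
    use_azuread_auth     = true
    use_oidc             = true # no client secret or access key involved
  }
}

provider "azurerm" {
  features {}

  # Client, tenant and subscription IDs come from ARM_* environment variables.
  use_oidc = true
}
```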

Not recommended, but here for example’s sake

- This would be a somewhat dangerous and insecure way of authenticating, and should be avoided. At least put the client secret in a variable.

- Example code here.

⚠️⚠️ Be advised that the example above is considered very bad practice! Never commit secrets into version control! ⚠️⚠️
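If the credentials really have to be passed through configuration, a less risky variation is to at least keep the secret in a sensitive variable. This is only a sketch: the GUIDs are dummy placeholders, and the variable is assumed to be supplied from outside the repository, for example through the TF_VAR_client_secret environment variable.

```hcl
# The secret itself is never written in code; it is supplied at runtime.
variable "client_secret" {
  type      = string
  sensitive = true
}

provider "azurerm" {
  features {}

  # Dummy placeholder IDs, replace with your own.
  client_id       = "00000000-0000-0000-0000-000000000000"
  tenant_id       = "00000000-0000-0000-0000-000000000000"
  subscription_id = "00000000-0000-0000-0000-000000000000"
  client_secret   = var.client_secret
}
```

Marking the variable as sensitive hides it in plan output, but the value can still end up in the state file, which is one more reason to protect the remote backend.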

Multiple providers, single remote backend

- This would be a slightly more advanced way of authenticating with several providers on different subscriptions. Azure Landing Zone type of deployment could be authenticated this way 😎

- Example code here.
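A rough sketch of the idea, using provider aliases. The subscription variables, the alias name, and the resource group names are all made up for illustration; the backend values are the example names from earlier in the post.

```hcl
# Hypothetical subscription IDs for two landing zone subscriptions.
variable "workload_subscription_id" {
  type = string
}

variable "connectivity_subscription_id" {
  type = string
}

# One shared remote backend for the whole configuration.
terraform {
  backend "azurerm" {
    storage_account_name = "tfstatesa1234"
    container_name       = "tfstate"
    key                  = "prod.terraform.tfstate"
    use_azuread_auth     = true
  }
}

# Default provider, targeting the workload subscription.
provider "azurerm" {
  features {}
  subscription_id = var.workload_subscription_id
}

# Aliased provider, targeting the connectivity subscription.
provider "azurerm" {
  alias = "connectivity"
  features {}
  subscription_id = var.connectivity_subscription_id
}

# Uses the default provider.
resource "azurerm_resource_group" "workload" {
  name     = "workload-example-rg"
  location = "westeurope"
}

# Explicitly uses the aliased provider.
resource "azurerm_resource_group" "hub" {
  provider = azurerm.connectivity
  name     = "hub-network-example-rg"
  location = "westeurope"
}
```

The identity running Terraform needs permissions in every subscription referenced, which is worth weighing against the earlier tip about splitting service principals.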

In summary

I hope this post provided some useful insights on the different authentication mechanisms for Terraform in Azure, and that it also explained provider vs remote backend authentication.

In doing research for this post I also did some use_azuread_auth testing, and this will help me understand it and its implications better going forward.

Please don’t hesitate to comment if there is anything wrong or inaccurate 🙂

There is always room for improvement.